Decoding the Chart GPT Cycle: A Deep Dive into the Generative Pre-trained Transformer’s Iterative Process

Large language models (LLMs) like Chart GPT, built on the Generative Pre-trained Transformer (GPT) architecture, are changing how we interact with information and technology. Understanding how they work, however, means looking past the simple user interface at the cycle that powers their seemingly effortless generation of text, code, and other kinds of data. This article dissects the Chart GPT cycle: its stages, how they depend on one another, and the factors that affect its overall performance and efficiency.

1. Data Ingestion and Preprocessing: The Foundation of Knowledge

The Chart GPT cycle begins with data ingestion and preprocessing. This involves collecting massive datasets from diverse sources: books, articles, code repositories, websites, and more. Scale matters, because more data generally gives the model a richer, more nuanced picture of language and its many contexts. Data quality matters just as much. Noisy, incomplete, or biased data will inevitably produce a model whose outputs reflect those flaws.

Preprocessing is a multifaceted process that transforms raw data into a format suitable for the model. It includes:

- Cleaning: Removing irrelevant characters, HTML tags, and other noise.

- Tokenization: Breaking the text into individual units (tokens), which may be words, subwords, or characters, depending on the model’s design. This often involves techniques such as Byte Pair Encoding (BPE), which handles out-of-vocabulary words by splitting them into known subword units.

- Normalization: Converting text to a consistent format, such as a single Unicode form or, in some pipelines, lowercase, and handling variations in spelling and punctuation.

- Data Filtering: Removing duplicate entries, irrelevant content, or data that does not fit the model’s intended purpose.
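The cleaning, normalization, and filtering steps above can be sketched as a small pipeline. This is a minimal illustration under simplifying assumptions: the regex-based tag stripping, NFKC-plus-lowercase normalization, the minimum-length threshold (`min_chars`), and exact-match deduplication are choices made for this example, not a description of how any particular model’s data was prepared. Production pipelines typically use real HTML parsers and near-duplicate detection.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")  # naive HTML tag matcher (assumption: simple, well-formed tags)
SPACE_RE = re.compile(r"\s+")    # any run of Unicode whitespace, including non-breaking spaces


def clean(text: str) -> str:
    """Cleaning: drop HTML tags, decode entities such as &nbsp;, collapse whitespace."""
    text = TAG_RE.sub(" ", text)
    text = html.unescape(text)
    return SPACE_RE.sub(" ", text).strip()


def normalize(text: str) -> str:
    """Normalization: a consistent Unicode form (NFKC), then lowercase."""
    return unicodedata.normalize("NFKC", text).lower()


def preprocess(docs: list[str], min_chars: int = 20) -> list[str]:
    """Clean, normalize, and filter a list of raw documents."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        text = normalize(clean(doc))
        if len(text) < min_chars:  # filtering: too short to carry useful content
            continue
        if text in seen:           # filtering: exact duplicate of an earlier document
            continue
        seen.add(text)
        kept.append(text)
    return kept


raw = [
    "<p>Hello,&nbsp;World! This is a test.</p>",
    "hello, world!   this is a test.",
    "<br>",
    "Another   <b>useful</b> document here.",
]
print(preprocess(raw))
# ['hello, world! this is a test.', 'another useful document here.']
```

The second document is dropped as a duplicate only after cleaning and normalization, which is why ordering the steps this way matters.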

The efficiency and thoroughness of preprocessing significantly affect every later step and the model’s overall performance. A well-preprocessed dataset helps ensure that the model learns from high-quality information, reducing the risk of inaccurate or biased outputs.
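The BPE technique mentioned under Tokenization can be illustrated with a toy merge learner in the style of the subword BPE algorithm of Sennrich et al.: start from characters, repeatedly count adjacent symbol pairs, and merge the most frequent pair. This is a simplified sketch; it works on characters with an assumed end-of-word marker `</w>` and breaks frequency ties by first occurrence, whereas GPT-style tokenizers operate on bytes and differ in many details.

```python
from collections import Counter

END = "</w>"  # end-of-word marker (a convention assumed for this sketch)


def pair_counts(words: dict[tuple[str, ...], int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts: Counter = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts


def merge_pair(words: dict[tuple[str, ...], int], pair: tuple[str, str]) -> dict[tuple[str, ...], int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    a, b = pair
    merged: dict[tuple[str, ...], int] = {}
    for symbols, freq in words.items():
        out: list[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == a and symbols[i + 1] == b:
                out.append(a + b)
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(text: str, num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merge rules from whitespace-separated text."""
    words = dict(Counter(tuple(w) + (END,) for w in text.split()))
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        counts = pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # most frequent pair; ties go to the first seen
        words = merge_pair(words, best)
        merges.append(best)
    return merges


def encode_word(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a (possibly unseen) word by applying the learned merges in order."""
    words = {tuple(word) + (END,): 1}
    for pair in merges:
        words = merge_pair(words, pair)
    return list(next(iter(words)))


merges = learn_bpe("low low low lower lowest", num_merges=3)
print(merges)                         # [('l', 'o'), ('lo', 'w'), ('low', '</w>')]
print(encode_word("lowish", merges))  # ['low', 'i', 's', 'h', '</w>']
```

The unseen word "lowish" is still tokenized: its known prefix becomes one subword and the rest falls back to characters, which is how BPE avoids true out-of-vocabulary failures.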

2. Model Training: Learning the Patterns of Language

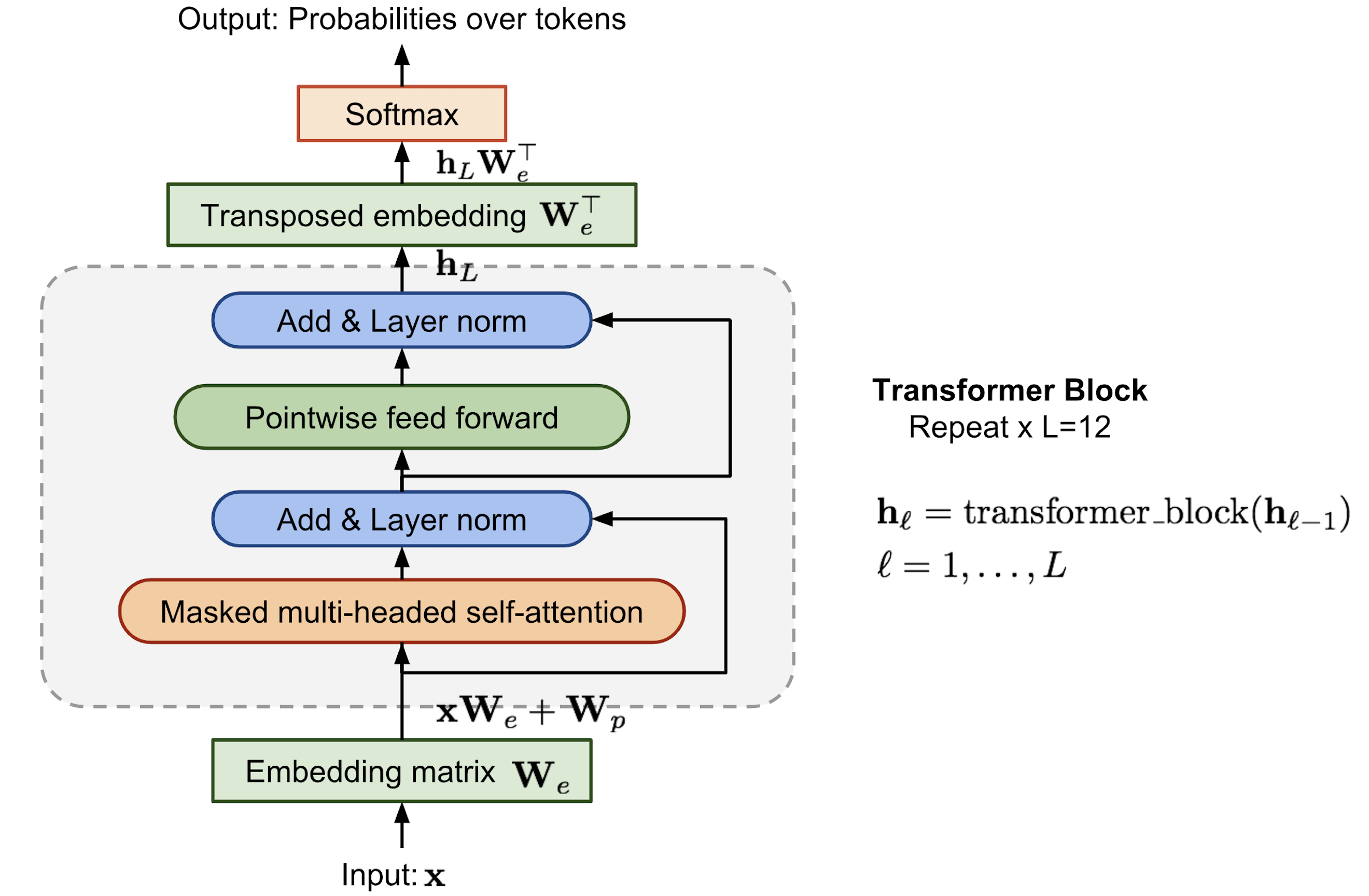

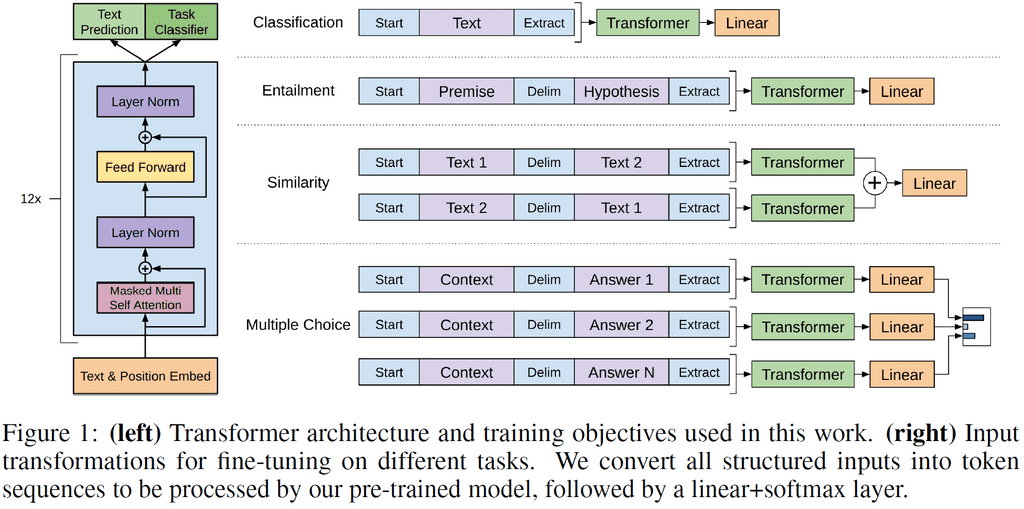

Once the data is prepared, the core of the Chart GPT cycle begins: model training. The preprocessed data is fed to the GPT architecture, a type of transformer network known for processing sequential data effectively. Training adjusts the model’s internal parameters (weights and biases) to minimize the difference between its predicted outputs and the actual data. The gradients that drive these adjustments are typically computed with backpropagation, and repeating the update over many batches gradually refines the model’s grasp of the patterns and relationships in the data.
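To make the training loop concrete, here is a deliberately tiny stand-in: a bigram model whose only parameters are a table of logits `W[prev][next]`, trained with full-batch gradient descent on the next-token cross-entropy loss. It is not a transformer. For a single layer like this, backpropagation collapses to one analytic gradient, `softmax(W[prev]) - onehot(next)`; in a real GPT the same chain rule is applied through every layer, usually by an automatic-differentiation framework. The name `train_bigram` and the toy corpus are hypothetical.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_bigram(token_ids: list[int], vocab_size: int, lr: float = 0.5, epochs: int = 50):
    """Fit next-token logits W[prev][next] by gradient descent on cross-entropy."""
    W = [[0.0] * vocab_size for _ in range(vocab_size)]  # parameters, initialised to zero
    pairs = list(zip(token_ids, token_ids[1:]))          # (context token, target token)
    history = []
    for _ in range(epochs):
        grads = [[0.0] * vocab_size for _ in range(vocab_size)]
        total_loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(W[prev])                # forward pass: predicted distribution
            total_loss += -math.log(probs[nxt])     # cross-entropy for the true next token
            for j in range(vocab_size):             # backward pass: dLoss/dW[prev][j]
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        for i in range(vocab_size):                 # parameter update (plain gradient descent)
            for j in range(vocab_size):
                W[i][j] -= lr * grads[i][j] / len(pairs)
        history.append(total_loss / len(pairs))     # mean loss measured before this epoch's update
    return W, history


tokens = [0, 1, 2, 0, 1, 2, 0, 1, 2]  # toy corpus: token 0 -> 1 -> 2 -> 0 ...
W, history = train_bigram(tokens, vocab_size=3)
print(round(history[0], 4))  # 1.0986, i.e. ln(3): uniform guessing before any update
print(history[-1] < history[0])  # True: the loss falls as the parameters are adjusted
print([max(range(3), key=lambda j: W[i][j]) for i in range(3)])  # [1, 2, 0]
```

After training, each token’s highest-scoring successor matches the pattern in the data, which is the "minimize the difference between predictions and actual data" idea in miniature.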

Several crucial factors define the training process:

- Objective Function: Defines the specific goal of training. Commonly, this means maximizing the likelihood of the next token in a sequence given the preceding tokens (equivalently, minimizing the cross-entropy loss).

- Optimizer: An algorithm that updates the model’s parameters based on the computed gradients. Popular optimizers include Adam and SGD.

- Hyperparameter Tuning: Experimenting with different settings (learning rate, batch size, number of epochs) to optimize the model’s performance. This is an iterative process that requires significant computational resources and expertise.

- Hardware Infrastructure: Training large language models requires substantial computational power, typically from specialized hardware such as GPUs and TPUs.
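The Optimizer bullet can be illustrated by comparing a plain SGD step with an Adam step on a small, badly scaled quadratic. The Adam update follows its standard published form (exponential moving averages of the gradient and squared gradient, with bias correction); the test function, learning rates, and step count are arbitrary choices for this sketch, and real training applies the same rule to billions of parameters through framework implementations rather than Python lists.

```python
import math


def grad(params: list[float]) -> list[float]:
    """Gradient of f(x, y) = (x - 3)^2 + 10 * (y + 1)^2, minimized at (3, -1)."""
    x, y = params
    return [2 * (x - 3), 20 * (y + 1)]


def sgd_step(params, grads, lr=0.01):
    """Plain SGD: move each parameter against its gradient by a fixed step size."""
    return [p - lr * g for p, g in zip(params, grads)]


class Adam:
    """Adam: per-parameter step sizes derived from running gradient statistics."""

    def __init__(self, n_params, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first moment: moving average of gradients
        self.v = [0.0] * n_params  # second moment: moving average of squared gradients
        self.t = 0                 # step counter used for bias correction

    def step(self, params, grads):
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # undo the bias toward zero
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


sgd_params = [0.0, 0.0]
adam_params = [0.0, 0.0]
adam = Adam(n_params=2)
for _ in range(1000):
    sgd_params = sgd_step(sgd_params, grad(sgd_params))
    adam_params = adam.step(adam_params, grad(adam_params))
print([round(p, 3) for p in sgd_params])  # [3.0, -1.0]
print(adam_params)                        # should also be close to (3, -1)
```

SGD’s single learning rate must suit the steepest direction (here it diverges for y above 0.1), while Adam rescales each parameter’s step by its own gradient history, which is one reason it is a common default for transformer training.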

Training is computationally intensive and time-consuming, often taking weeks or even months. The result is a highly parameterized model capable of producing coherent, contextually relevant text.

3. Prompt Engineering and Generation: Guiding the Model’s Creativity

With the trained model in place, the Chart GPT cycle enters the prompt engineering and generation stage. This is where the user interacts with the model by supplying a prompt: a piece of text that serves as the starting point for generation. The quality of the output depends heavily on the quality and clarity of the prompt. A well-crafted prompt provides enough context and guidance for the model to produce relevant, coherent text.

Prompt engineering involves:

- Specificity: Clearly defining the desired output format, style, and length.

- Contextualization: Providing enough background information to guide the model’s understanding.

- Iterative Refinement: Experimenting with different prompts to achieve the desired output.
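The three practices above can be made concrete with a small helper that assembles a prompt from explicit parts, so each part can be varied independently during iterative refinement. `PromptSpec` and `build_prompt` are hypothetical names, and the Context / Task / Constraints layout is just one reasonable convention; no particular model requires it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptSpec:
    task: str
    context: str = ""                # contextualization: background the model should use
    output_format: str = ""          # specificity: e.g. "a bulleted list"
    style: str = ""                  # specificity: e.g. "plain language for beginners"
    max_words: Optional[int] = None  # specificity: length limit


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts of a PromptSpec into one prompt string."""
    parts = []
    if spec.context:
        parts.append(f"Context:\n{spec.context}")
    parts.append(f"Task:\n{spec.task}")
    constraints = []
    if spec.output_format:
        constraints.append(f"- Format: {spec.output_format}")
    if spec.style:
        constraints.append(f"- Style: {spec.style}")
    if spec.max_words is not None:
        constraints.append(f"- Length: at most {spec.max_words} words")
    if constraints:
        parts.append("Constraints:\n" + "\n".join(constraints))
    return "\n\n".join(parts)


# Iterative refinement: start vague, then add context and constraints.
draft = PromptSpec(task="Explain tokenization.")
refined = PromptSpec(
    task="Explain tokenization.",
    context="The reader knows Python but has never trained a language model.",
    output_format="three short bullet points",
    max_words=80,
)
print(build_prompt(draft))
print("---")
print(build_prompt(refined))
```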

Once the prompt is provided, the model uses what it has learned to generate text autoregressively: it predicts the next token from all preceding tokens, including the initial prompt, appends that token, and repeats. This continues until a predefined stopping criterion is met, such as reaching a maximum length or producing a special end-of-sequence token.
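The autoregressive loop just described can be sketched independently of any real model. Here `next_token_logits` is a hypothetical stand-in for a trained network: a function from the tokens so far to a score for each candidate next token. The sketch shows both stopping criteria (a maximum number of new tokens and an end-of-sequence token) and two common decoding choices, greedy selection and temperature sampling; the toy bigram table is invented for illustration.

```python
import math
import random
from typing import Callable, Dict, List, Optional

EOS = "<eos>"  # end-of-sequence token (name assumed for this sketch)


def generate(
    next_token_logits: Callable[[List[str]], Dict[str, float]],
    prompt: List[str],
    max_new_tokens: int = 20,
    temperature: float = 0.0,
    seed: Optional[int] = None,
) -> List[str]:
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):             # stopping criterion 1: maximum length
        logits = next_token_logits(tokens)      # condition on the prompt plus all generated tokens
        if temperature == 0.0:
            choice = max(logits, key=logits.get)  # greedy: highest-scoring token
        else:
            top = max(logits.values())
            weights = [math.exp((s - top) / temperature) for s in logits.values()]
            choice = rng.choices(list(logits), weights=weights, k=1)[0]  # sample from softmax(logits / T)
        if choice == EOS:                       # stopping criterion 2: end-of-sequence token
            break
        tokens.append(choice)                   # the new token becomes part of the context
    return tokens


# Toy "model": scores for the next token depend only on the last token.
TABLE = {
    "the": {"cat": 2.0, "dog": 1.0, EOS: -1.0},
    "cat": {"sat": 2.0, EOS: 0.0},
    "dog": {"ran": 2.0, EOS: 0.0},
    "sat": {EOS: 3.0, "down": 1.0},
    "ran": {EOS: 3.0},
    "down": {EOS: 5.0},
}


def toy_model(tokens: List[str]) -> Dict[str, float]:
    return TABLE.get(tokens[-1], {EOS: 0.0})


print(generate(toy_model, ["the"]))                    # ['the', 'cat', 'sat']
print(generate(toy_model, ["the"], max_new_tokens=1))  # ['the', 'cat']
print(generate(toy_model, ["the"], temperature=1.0, seed=0))
```

Greedy decoding is deterministic; with a temperature above zero the same prompt can yield different continuations, and a fixed `seed` makes a sampled run reproducible.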

4. Output Evaluation and Feedback: Refining the Cycle

The final stage of the Chart GPT cycle involves evaluating the generated output and providing feedback. This is a crucial step for improving the model’s performance over time. Evaluation can be carried out through various methods:

- Human Evaluation: Human judges assess the quality, coherence, and relevance of the generated text.

- Automated Metrics: Metrics such as BLEU or ROUGE compare the generated text with reference texts.

- Reinforcement Learning from Human Feedback (RLHF): Human preference judgments are used to train a reward model, and reinforcement learning then aligns the model’s outputs with those preferences.
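The automated metrics mentioned above both reduce to counting n-gram overlap between a candidate and a reference. The sketch below computes BLEU-style clipped n-gram precision, a single-reference sentence BLEU with brevity penalty, and ROUGE-N recall over pre-tokenized word lists. It omits smoothing, multiple references, and corpus-level aggregation, so for reported results an established implementation such as sacreBLEU is the safer choice.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate, reference, n):
    """BLEU's modified precision: candidate n-gram counts are capped by reference counts."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    return sum(min(c, ref[g]) for g, c in cand.items()) / total


def bleu(candidate, reference, max_n=4):
    """Sentence BLEU: geometric mean of clipped precisions times a brevity penalty."""
    precisions = [clipped_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # unsmoothed BLEU is zero when any n-gram order has no matches
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_mean)


def rouge_n_recall(candidate, reference, n):
    """ROUGE-N recall: fraction of reference n-grams that also appear in the candidate."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    return sum(min(c, cand[g]) for g, c in ref.items()) / total


ref = "the cat is on the mat".split()
hyp = "the cat sat on the mat".split()
print(clipped_precision("the the the the the the the".split(), ref, 1))  # 2/7 ~ 0.2857
print(rouge_n_recall(hyp, ref, 1))  # 5/6 ~ 0.8333
print(bleu(hyp, ref))               # 0.0: no shared 4-grams
print(bleu(ref, ref))               # 1.0
```

The example pair shows a known weakness of such metrics: one substituted word leaves unigram recall high but zeroes out unsmoothed BLEU, which is why human evaluation remains part of the cycle.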

Feedback from the evaluation stage can be used to refine the model’s training, improving performance in subsequent iterations. This iterative feedback loop is essential for continually improving the model’s capabilities and addressing potential biases or inaccuracies.
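For the RLHF item, one concrete piece of this feedback loop is the reward model, which is commonly trained on pairs of responses where humans preferred one over the other, using the pairwise loss `-log(sigmoid(r_chosen - r_rejected))`. The sketch fits a linear reward over two hand-made features by gradient descent. The features, data, and function names are hypothetical; real reward models are neural networks that score the full text, and the subsequent reinforcement-learning step that optimizes the language model against the reward (for example with PPO) is not shown.

```python
import math


def sigmoid(x: float) -> float:
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # numerically stable branch for negative inputs
    return z / (1.0 + z)


def softplus(x: float) -> float:
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))  # stable log(1 + e^x)


def reward(w, features):
    return sum(wi * xi for wi, xi in zip(w, features))


def pairwise_loss(w, pairs):
    """Mean of -log sigmoid(r_chosen - r_rejected), written as softplus(-margin)."""
    return sum(softplus(-(reward(w, c) - reward(w, r))) for c, r in pairs) / len(pairs)


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Gradient descent on the pairwise preference loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            coef = -(1.0 - sigmoid(margin))  # d loss / d margin
            for i in range(dim):
                grad[i] += coef * (chosen[i] - rejected[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w


# Hypothetical features per response: [helpfulness estimate, toxicity flag].
preferences = [
    ([1.0, 0.0], [0.0, 1.0]),  # (chosen, rejected)
    ([0.8, 0.1], [0.3, 0.9]),
    ([0.6, 0.0], [0.5, 0.7]),
]
w = train_reward_model(preferences, dim=2)
print(round(pairwise_loss([0.0, 0.0], preferences), 4))  # 0.6931, i.e. ln 2 before training
print(pairwise_loss(w, preferences) < math.log(2))        # True
print(w[0] > 0, w[1] < 0)                                 # True True
```

The learned weights reward the feature humans favored and penalize the one they rejected; in full RLHF, that learned score stands in for human judgment during reinforcement learning.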

5. The Iterative Nature and Future Directions

The Chart GPT cycle is inherently iterative. The model is refined across successive versions through data updates, retraining, and feedback mechanisms. This continuous improvement is crucial for keeping the model up to date and adapting it to evolving language patterns and user needs.

Future directions for Chart GPT and similar models include:

- Improved Efficiency: Developing more efficient training methods to reduce computational cost and training time.

- Enhanced Contextual Understanding: Improving the model’s ability to understand complex contexts and nuanced language.

- Reduced Bias and Toxicity: Developing techniques to mitigate bias and toxicity in generated outputs.

- Multimodal Capabilities: Extending the model to handle other data modalities, such as images and audio.

- Personalized Experiences: Tailoring the model’s outputs to individual user preferences and needs.

Understanding the Chart GPT cycle gives a deeper appreciation of the complexity behind these powerful language models. By continually refining each stage of the cycle, researchers and developers are pushing the boundaries of what is possible with AI, leading to ever more sophisticated and impactful applications. The journey of Chart GPT, and of LLMs in general, is far from over, and this rapidly evolving field promises further developments.
